From the Terminal

keyd on Gentoo with OpenRC and KDE Plasma

git clone https://github.com/rvaiya/keyd

cd keyd

make && sudo make install

touch /etc/init.d/keyd

nano /etc/init.d/keyd

Pop this in there and save.

#!/sbin/openrc-run

# Description of the service

description="keyd key remapping daemon"

# The location of the keyd binary

command="/usr/local/bin/keyd"

#command_args="-d"

pidfile="/run/keyd.pid"

depend() {

need localmount

after bootmisc

}

start() {

ebegin "Starting keyd"

start-stop-daemon --start --exec $command --background --user root --make-pidfile --pidfile $pidfile --

eend $?

}

stop() {

ebegin "Stopping keyd"

start-stop-daemon --stop --pidfile $pidfile

eend $?

}

restart() {

ebegin "Restarting keyd"

stop

start

eend $?

}

sudo rc-update add keyd default

/etc/init.d/keyd start

sudo setfacl -m u:USERNAME:rw /var/run/keyd.socket
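The socket is created by the daemon when it starts, so the ACL above most likely has to be applied again after every restart. Here is a minimal sketch of a helper that waits for the socket to show up before granting access. The function names `wait_for_path` and `grant_keyd_socket` are my own and not part of keyd; the socket path is the one used above.

```shell
#!/bin/sh
# wait_for_path PATH [TRIES]
# Polls every 0.1s until PATH exists; gives up after TRIES checks (default 50).
wait_for_path() {
    path=$1
    tries=${2:-50}
    while [ "$tries" -gt 0 ]; do
        [ -e "$path" ] && return 0
        tries=$((tries - 1))
        sleep 0.1
    done
    return 1
}

# grant_keyd_socket USER [SOCKET]
# Hypothetical wrapper: waits for the socket, then applies the same ACL as above.
grant_keyd_socket() {
    user=$1
    socket=${2:-/var/run/keyd.socket}
    if ! wait_for_path "$socket"; then
        echo "keyd socket not found: $socket" >&2
        return 1
    fi
    setfacl -m "u:${user}:rw" "$socket"
}
```

Run it as root right after starting the service, for example `grant_keyd_socket USERNAME`.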

If you want application specific key binds you need to run /usr/local/bin/keyd-application-mapper inside your KDE Plasma session. To do that simply add it to your autostart through the System Settings application.

Port forwarding a database through a VPN with Docker and Nginx

This goes into your docker-compose.yml

version: '3.9'
services:
  openvpn-client:
    image: ghcr.io/wfg/openvpn-client
    container_name: openvpn-client
    cap_add:
      - NET_ADMIN
    devices:
      - /dev/net/tun
    volumes:
      - ./.docker/openvpn:/config # place config.ovpn file into this folder
    #network_mode: container:openvpn-client
    #restart: unless-stopped
    environment:
      - CONFIG_FILE=config.ovpn
      - ALLOWED_SUBNETS=192.168.0.0/24,192.168.1.0/24
      # - AUTH_SECRET=credentials.txt
    networks:
      front-tier:
        ipv4_address: 172.25.0.7
  proxy:
    image: nginx:1.25.1
    container_name: db-proxy
    network_mode: container:openvpn-client
    volumes:
      - ./.docker/proxy/nginx/:/etc/nginx/
      - ./.docker/proxy/logs/:/var/log/nginx/
    depends_on:
      - openvpn-client
networks:
  front-tier:
    ipam:
      driver: default
      config:
        - subnet: "172.25.0.0/24"

Make sure to create the folders .docker/proxy/nginx/ and .docker/proxy/logs/.

Place this into .docker/proxy/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

# This is where all http server configs go.

}

stream {

server {

listen 5432;

proxy_connect_timeout 60s;

proxy_socket_keepalive on;

proxy_pass 10.0.3.11:5432;

}

}

Just make sure to set proxy_pass to the destination.

After running docker-compose up you'll be good to go.
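To check that the forward actually works, a quick TCP probe against the proxy is usually enough. This is a rough sketch: `port_open` is a made-up helper name, it relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command, and it assumes the proxy is reachable from your host at the static address 172.25.0.7 from the compose file.

```shell
#!/bin/sh
# port_open HOST PORT [SECONDS]
# Returns 0 if a TCP connection to HOST:PORT can be opened within SECONDS
# (default 3). Uses bash's /dev/tcp pseudo-device, so bash must be installed.
port_open() {
    host=$1
    port=$2
    secs=${3:-3}
    timeout "$secs" bash -c ': </dev/tcp/"$0"/"$1"' "$host" "$port" 2>/dev/null
}
```

For example: `port_open 172.25.0.7 5432 && echo "database port is reachable"`. A successful connect only tells you that Nginx is listening; whether the VPN side answers still shows up in the proxy's error log.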

Configuring an RTMP streaming server for VRChat with Nginx

I recently picked up a Valve Index and I've started to explore the music community in VRChat.

One of the most compelling aspects of VRChat as a platform is how you can use it as a way of discovering music. A vast and diverse community of individuals with varied musical tastes and backgrounds already exists. In VRChat, you can attend music festivals, explore custom-built music worlds, and interact with other users who share your passion for various musical genres. This environment encourages discovery and exposes you to a wide range of genres, artists, and tracks that you may not have encountered otherwise. It's a melting pot of musical creativity waiting to be explored.

Moreover, VRChat offers a unique opportunity for musicians, DJs, and producers to hone their craft and experiment with performing, mixing, and DJing in a virtual setting. Through the integration of streaming music into VRChat, you can curate and share playlists, perform live DJ sets, and even create your own virtual music venues. The interactive nature of VRChat enables you to receive immediate feedback from your audience, allowing you to refine your skills, experiment with new techniques, and build a dedicated following.

While Twitch and YouTube have transformed the streaming landscape, they do have limitations that can impact the quality and control of your stream. These platforms often inject ads into your content, disrupting viewer engagement, and they impose restrictions on bitrate, limiting the stream's video quality. To overcome these challenges, many streamers are turning to Nginx for RTMP. By configuring Nginx to stream directly to your audience, you regain control over your stream, eliminate unwanted ads, and achieve higher bitrates for an uninterrupted, high-quality viewing experience.

This reference should let you quickly set up a public facing site.

nano /etc/nginx/sites-enabled/stream.conf

server {

listen 443 ssl;

server_name stream.yourdomain.example;

ssl_certificate /etc/letsencrypt/live/stream.yourdomain.example/fullchain.pem; # managed by Certbot

ssl_certificate_key /etc/letsencrypt/live/stream.yourdomain.example/privkey.pem; # managed by Certbot

include sites-available/include-ssl.conf;

server_tokens off;

# rtmp stat

location /stat {

rtmp_stat all;

rtmp_stat_stylesheet stat.xsl;

}

location /stat.xsl {

root /var/www/stream/rtmp;

}

# rtmp control

location /control {

rtmp_control all;

}

location /hls {

# Serve HLS fragments

types {

application/vnd.apple.mpegurl m3u8;

video/mp2t ts;

}

root /tmp;

add_header Cache-Control no-cache;

add_header 'Access-Control-Allow-Origin' '*' always;

add_header 'Access-Control-Expose-Headers' 'Content-Length';

if ($request_method = 'OPTIONS') {

add_header 'Access-Control-Allow-Origin' '*';

add_header 'Access-Control-Max-Age' 1728000;

add_header 'Content-Type' 'text/plain; charset=UTF-8';

add_header 'Content-Length' 0;

return 204;

}

}

}

Make sure to add this global rtmp config.

sudo nano /etc/nginx/rtmp.conf

rtmp {

server {

listen 1935; # this is the port, you may change it if necessary.

chunk_size 4096;

application live { # "live" may be changed to whatever you'd like, this will affect the URLs we use later, though.

live on;

allow publish 0.0.0.0; # put your IP here.

deny publish all; # denied if none of the above apply.

# -- HLS --

hls on;

hls_path /tmp/hls; # this is where all of HLS's files will be stored.

# They are a running replacement so disk space will be minimal.

# Adjust other HLS settings if necessary to level it out if it's a problem.

hls_playlist_length 60s;

hls_fragment 1s;

# optionally,

hls_continuous on;

}

}

}

Add this to the bottom of /etc/nginx/nginx.conf

include rtmp.conf;

The full publish URL is rtmp://stream.yourdomain.example/live/random-stream-key, but in OBS the server field gets rtmp://stream.yourdomain.example/live and the stream key goes into the second field.

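Finally the stream link we will put into VRC will look like this. The playlist is served by the /hls location in the site config, so the path starts with /hls rather than the RTMP application name.

https://stream.yourdomain.example/hls/random-stream-key.m3u8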
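Since the same key shows up in three places, here is a small sketch that prints all of them from one set of inputs. `stream_urls` is a hypothetical helper, and it assumes the config above: the `/hls` location with `root /tmp` together with `hls_path /tmp/hls`, which puts the playlist at `/hls/<key>.m3u8`.

```shell
#!/bin/sh
# stream_urls DOMAIN APP KEY
# Prints the OBS server field, the OBS stream key and the HLS playlist URL.
stream_urls() {
    domain=$1
    app=$2
    key=$3
    printf 'OBS server: rtmp://%s/%s\n' "$domain" "$app"
    printf 'OBS key:    %s\n' "$key"
    printf 'HLS URL:    https://%s/hls/%s.m3u8\n' "$domain" "$key"
}
```

Calling `stream_urls stream.yourdomain.example live random-stream-key` gives you the two OBS fields and the link to paste into a VRChat video player.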

PHP attributes are so awesome I just had to add attribute based field mapping to my ORM

I wrote this post to talk about the architectural decisions I had to make in upgrading my ORM recently to PHP8 as well as the general justification for using an ORM in the first place as a query writer, runner, and object factory and why I consider attributes to be the holy grail of ORM field mapping in PHP.

Writing Queries

I've always been fascinated with moving data in and out of a database. I've been at this since the PHP4 days, you know. As hard as it is to believe, even back then we had a testable, repeatable, and accurate way of pushing data into and out of a database. I quickly found myself writing query after query... manually. At the time there was no off-the-shelf framework you could just composer require. So I found myself thinking about this problem again and again and again and again.

Put simply when you write SQL queries you are mapping variable values in memory to a string. If you are one of those people that claims that an ORM is bloat you'll find yourself writing hundreds of queries all over your projects.

If you've ever imploded an array by a "','" but then ended up doing it in dozens or hundreds or thousands of places the next logical step is to stop rewriting your code and write a library.... and hence an ORM is born. An ORM is an absolute statement; that "I will not rewrite code for handling a datetime" and then actually following through.

To make this happen you must write some sort of translation layer from PHP types to SQL types. In most ORMs this is called mapping.

Before PHP8 the typical thing to do was to use a PHP array defined statically containing all that information. Like this. In fact this is from Divergence from before version 2.

public static $fields = [

'ID' => [

'type' => 'integer',

'autoincrement' => true,

'unsigned' => true,

],

'Class' => [

'type' => 'enum',

'notnull' => true,

'values' => [],

],

'Created' => [

'type' => 'timestamp',

'default' => 'CURRENT_TIMESTAMP',

],

'CreatorID' => [

'type' => 'integer',

'notnull' => false,

],

];

But this kinda sucks. There's no way to do any auto complete and it's just generally a little bit slower.

A few frameworks decided to support mapping using annotations (an extended PHPDoc in PHP comments) and even yaml field maps but those are all just bandaids on the real problem. Which was that there was no real way to provide proper field definitions using the raw language's tokens, instead relying on the runtime to store and process that information.

Attributes

So that's my long winded explanation about why my absolute favorite feature of PHP 8 is attributes. Specifically for ORM field mapping. Yea really.

#[Column(type: "integer", primary:true, autoincrement:true, unsigned:true)]

protected $ID;

#[Column(type: "enum", notnull:true, values:[])]

protected $Class;

#[Column(type: "timestamp", default:'CURRENT_TIMESTAMP')]

protected $Created;

#[Column(type: "integer", notnull:false)]

protected $CreatorID;

This is sooooo much cleaner. Look how awesome it is. Suddenly I have auto complete for field column definitions right in my IDE!

Now the code is cleaner and easier to follow! You've even got the ability to type hint all your fields.

Once we take field mapping to its logical conclusion it becomes practical to simply map as many database types as we can into the ORM. We can even try to support all of the various field types that could be used in SQL and represented somehow in PHP. Of course to make this happen it becomes necessary to tell the framework some details about the database field type you decide to use. For example you can easily see yourself using a string variable type for varchar, char, text, smalltext, blob, etc. but most ORMs aren't typically smart enough to warn you when you inevitably make a mistake and try to save a string longer than 255 characters to a varchar(255). If you were to build all of this yourself you would invariably find yourself creating your own types for your ORM and doing a translation from language primitive to database type and back just like I am here. This gets even more complex when an ORM decides to support multiple database engines. Once this becomes more fleshed out you can even have your ORM write the schema query and automatically create your tables from your code.

Here for example I'm gonna go ahead and create a Tag class and then save something.

class Tag extends Model {

public static $tableName = 'tags';

protected $Tag; // this will be a varchar(255) in MySQL, a string in PHP

}

$myTag = new Tag();

$myTag->Tag = 'my tag';

$myTag->save();

With these few simple lines of code we've created a tags table and saved a new Tag "my tag" into our new table. The framework automatically detected the table was missing during the save and created it and then followed through by running the original save. Excellent for bootstrapping new projects or installing software.

Protected? Magic Methods

Traditionally it's common to think that __get and __set are triggered only when a property is undefined. However, they are also triggered when you try to access a protected property from outside of the model: reading it always triggers __get and writing it always triggers __set. For this reason I decided to use protected properties in a Model for mapping.

The way in and out allows us to do some type casting. For example Divergence supports reading a timestamp in Y-m-d H:i:s, unix timestamp, and if it's a string that isn't Y-m-d H:i:s it will try running it through strtotime() before giving up. But that will only ever happen when __set is called thereby starting the chain of events leading to the necessary type casting for that field type. Unfortunately the downside to using a protected in this context is that when you need to access a field inside the object you can't use $this->Tag = 'mytag'; because it won't trigger __set and so what's gonna happen is you will end up messing with the internal data of that field incorrectly. So for the specific context where you're working with the field directly inside of the object itself you should actually use setValue and getValue instead. Frankly you can use __set and __get directly but let's be civilized here. This caveat is why I would like to see the ability for PHP to have __set and __get triggerables configurable to do so on existing object members.

Relationships.

But wait. There's more! Now with the ability to use Attributes for field definitions we can use them for relationship definitions as well.

#[Relation(

type: 'one-one',

class: User::class,

local: 'CreatorID',

foreign: 'ID'

)]

protected $Creator;

#[Relation(

type: 'one-many',

class: PostTags::class,

local: 'ID',

foreign: 'BlogPostID'

)]

protected $Tags;

Relationships take Attributes to the next level.

$this->getValue('Tags');

This is all you need to pull all the Tags as an array. Most relationship types can be easily expressed in this format.

$Model->Tags // also works but only from "outside"

You can run it like this as well from outside of the Model.

PHP in 2023 is blindingly fast.

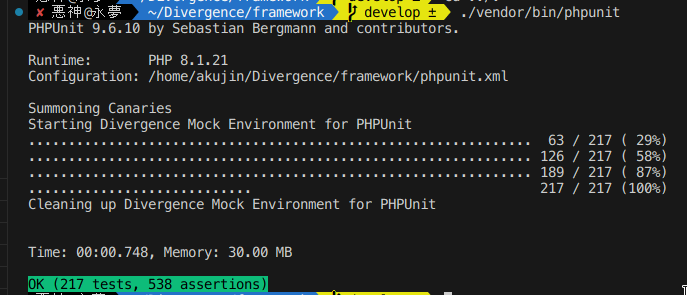

Today the entire suite of tests written for Divergence covering 82% of the code completes in under a second. Some of these tests are generating thumbnails and random byte strings for mock data.

For reference in 2019 the same test suite clocked in at 8.98 seconds for only 196 tests. By the way these are database tests including dropping the database and creating new tables with data from scratch. The data is randomized on every run.

Performance

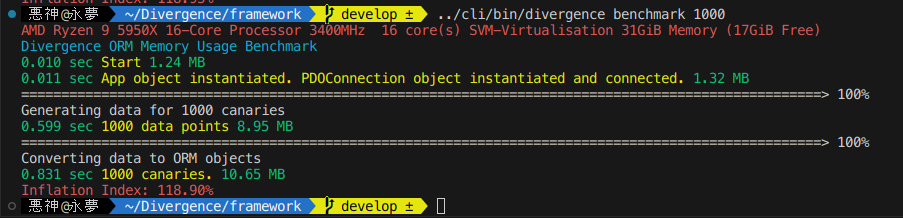

What you are seeing is [time since start] [label] [memory usage] at print time.

The first part where it generates 1000 data points is all using math random functions to generate random data entirely in raw non-framework PHP code. Each Canary has 17 fields meant to simulate every field type available to you in the ORM. Generating all 17 fields 1000 times takes a non-trivial amount of time and later when creating objects from this data it must again process 17,000 data points.

Optimizing the memory usage here will be a point of concern for me in future versions of the ORM.

All the work related to what I wrote about in this post is currently powering this blog and is available on Github and Packagist.

Bunny Post

This bunny video brought to you by this code.

How to give a Chromium based browser an isolated custom icon for a separate profile in Linux

Create a new desktop icon in ~/.local/share/applications.

Use this as a template

[Desktop Action new-private-window]

Exec=chromium-browser --incognito

Name=New Incognito Window

[Desktop Action new-window]

Exec=chromium-browser

Name=New Window

[Desktop Entry]

Actions=new-window;new-private-window;

Categories=Network;WebBrowser;

Comment[en_US]=Access the Internet

Comment=Access the Internet

Exec=chromium-browser --user-data-dir=/home/akujin/.config/chromium-ihub/ --app-id=iHubChromium --class=iHubChromium && xdotool search --sync --classname iHubChromium set_window --class iHubChromium

GenericName[en_US]=Web Browser

GenericName=Web Browser

Icon=/home/akujin/iHub/logo-square.png

MimeType=text/html;image/webp;image/png;image/jpeg;image/gif;application/xml;application/xml;application/xhtml+xml;application/rss+xml;application/rdf+xml;application/pdf;

Name[en_US]=Chromium iHub

Name=Chromium iHub

Path=

StartupNotify=true

StartupWMClass=iHubChromium

Terminal=false

TerminalOptions=

Type=Application

Version=1.0

X-DBUS-ServiceName=

X-DBUS-StartupType=

X-KDE-SubstituteUID=false

X-KDE-Username=

Run update-desktop-database ~/.local/share/applications after any changes to the desktop file.

And there you have it. A custom icon.

Gyudon (Japanese Beef Rice Bowl) 牛丼

Gyudon Sauce for 1/2 lb of rib eye

- ½ cup dashi (Japanese soup stock) - if using Hondashi (dry dashi), stir 1/4 tsp into 1/2 cup of hot water

- 1 Tbsp sugar (adjust according to your preference)

- 2 Tbsp sake (substitute with dry sherry or Chinese rice wine; for a non-alcoholic version, use water)

- 2 Tbsp mirin (substitute with 2 Tbsp sake/water + 2 tsp sugar)

- 3 Tbsp soy sauce

Gyudon

- 1/2 lb of ribeye thinly sliced

- A white onion approx 2.5 to 3 inches in diameter, cut thinly into half circles

- Japanese style white rice

- Raw egg

Optional Garnishes

- Sesame seeds

- Green onion

- Grated garlic

- Pickled ginger

- Red pepper

Instructions

Prepare the gyudon sauce by mixing all the ingredients listed. Put the gyudon sauce into a pan with a lid and heat it until it starts to give off steam. Place the onion into the sauce and spread it around so that most of the onion is touching the pan. Cover with the lid and let it cook until the onions are soft, stirring a few times. Add the meat by laying out each slice and flipping it after a minute or so. Stack the cooked slices to the side as they become ready and add more. When all the meat is done, stir.

Prepare the bowls by heating them up in the oven at 200 as an optional step. Lay the rice into the bowl with a dip in the center. Lay out the meat and onions over the rice to the outside of the dip. Pop a raw egg over the dip. Add green onion, red pepper, sesame seeds, and grated garlic to taste. Serve quickly & stir quickly so the raw egg is cooked by the rice.

V4L2 Notes for Linux

Find out what is using capture devices

fuser /dev/video0

Find out name of process

ps axl | grep ${PID}

Show info

v4l2-ctl --all

Set resolution and frame rate

v4l2-ctl -v width=1920,height=1080 --set-parm=60

v4l2-ctl -v width=3840,height=2160 --set-parm=30

Reset USB Device

usbreset "Live Streaming USB Device"

Show Stream in GUI

qvidcap

Create a virtual video capture device

sudo modprobe v4l2loopback devices=1 video_nr=10 card_label="OBS Cam" exclusive_caps=1

Automatically on Kernel Start up

Must install https://github.com/umlaeute/v4l2loopback

media-video/v4l2loopback on Gentoo

echo "v4l2loopback" > /etc/modules-load.d/v4l2loopback.conf

echo "options v4l2loopback devices=1 video_nr=10 card_label=\"OBS Cam\" exclusive_caps=1" > /etc/modprobe.d/v4l2.conf

Run modprobe v4l2loopback after setting it up to load without restarting.
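To confirm the loopback device came up under the number you asked for, you can match the card label against sysfs. A minimal sketch: `find_video_by_label` is a name I made up, and the second argument only exists so the lookup can be pointed at a different directory for testing.

```shell
#!/bin/sh
# find_video_by_label LABEL [SYSFS_DIR]
# Prints the /dev/videoN node whose sysfs "name" file equals LABEL.
# Returns 1 if no device matches.
find_video_by_label() {
    label=$1
    root=${2:-/sys/class/video4linux}
    for dir in "$root"/video*; do
        [ -r "$dir/name" ] || continue
        if [ "$(cat "$dir/name")" = "$label" ]; then
            echo "/dev/$(basename "$dir")"
            return 0
        fi
    done
    return 1
}
```

With the options above, `find_video_by_label "OBS Cam"` should print /dev/video10 once the module is loaded.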

How to make a launcher for Spotify in Linux that works with Spotify links

When launching Spotify in Linux by default the deb package comes with a desktop file that contains an exec that essentially just runs the "spotify" binary. Spotify does have a dbus interface for certain things. We can look for an existing process and if one is found we throw the Spotify link into dbus instead of opening another Spotify instance.

I wrote a simple launcher replacement. Just set your desktop file to use this instead of the default.

#!/bin/bash

# check if spotify is already running and if so just pass the uri in

if pgrep -f "Spotify/[0-9].[0-9].[0-9]" > /dev/null

then

busline="org.mpris.MediaPlayer2.spotify /org/mpris/MediaPlayer2 org.mpris.MediaPlayer2.Player.OpenUri $1"

echo "Spotify is already running"

echo "Sending ${busline} to dbus"

if command -v qdbus &> /dev/null

then

# left unquoted on purpose so it splits into service, path, method and uri

qdbus $busline

exit

fi

if command -v dbus-send &> /dev/null

then

# dbus-send needs an explicit destination and a typed argument

dbus-send --type=method_call --dest=org.mpris.MediaPlayer2.spotify /org/mpris/MediaPlayer2 org.mpris.MediaPlayer2.Player.OpenUri "string:$1"

exit

fi

echo "No bus dispatcher found."

# otherwise launch spotify

else

spotify $1 &>/dev/null &

fi
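For the desktop file part, here is a rough sketch that writes a user-level entry pointing at the launcher. The function name, the file name `spotify-launcher.desktop` and the launcher path you pass in are all placeholders, and `Icon=spotify-client` is my assumption about the icon name shipped with the deb package.

```shell
#!/bin/sh
# write_spotify_desktop LAUNCHER [DEST_DIR]
# Writes a desktop entry that runs LAUNCHER and handles spotify: links.
write_spotify_desktop() {
    launcher=$1
    dest=${2:-$HOME/.local/share/applications}
    mkdir -p "$dest"
    cat > "$dest/spotify-launcher.desktop" <<EOF
[Desktop Entry]
Type=Application
Name=Spotify
Exec=$launcher %U
Icon=spotify-client
Terminal=false
MimeType=x-scheme-handler/spotify;
EOF
}
```

After writing it, `xdg-mime default spotify-launcher.desktop x-scheme-handler/spotify` makes it the handler for spotify: links.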

Slack magic login broken on Linux with KDE

What's going on?

kde-open5 is breaking the links by making all characters lowercase.

Get the link by running:

while sleep .1; do ps aux | grep slack | grep -v grep | grep magic; done

Then just run `xdg-open [the link]`

The link should look like this:

slack://T8PPLKX2R/magic-login/3564479012256-b761e747066f87b708f43d5c0290bb076f276b121486a5fb6376af0dd7169e7d
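If you hit this often, the polling loop can be wrapped so it prints just the link with its original casing. This is a sketch with made-up function names. The `slac[k]` bracket in the pattern is there so grep does not match its own command line in the ps output.

```shell
#!/bin/sh
# extract_magic_link
# Reads text on stdin and prints the first slack:// magic-login link found.
extract_magic_link() {
    grep -o 'slac[k]://[^[:space:]]*magic-login[^[:space:]]*' | head -n 1
}

# wait_for_magic_link
# Polls the process list until a magic-login link shows up, then prints it.
wait_for_magic_link() {
    while :; do
        link=$(ps aux | extract_magic_link)
        if [ -n "$link" ]; then
            printf '%s\n' "$link"
            return 0
        fi
        sleep 0.1
    done
}
```

Start `wait_for_magic_link` in a terminal, click the login button in the browser, and pass the printed link to xdg-open as described above.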